Overview

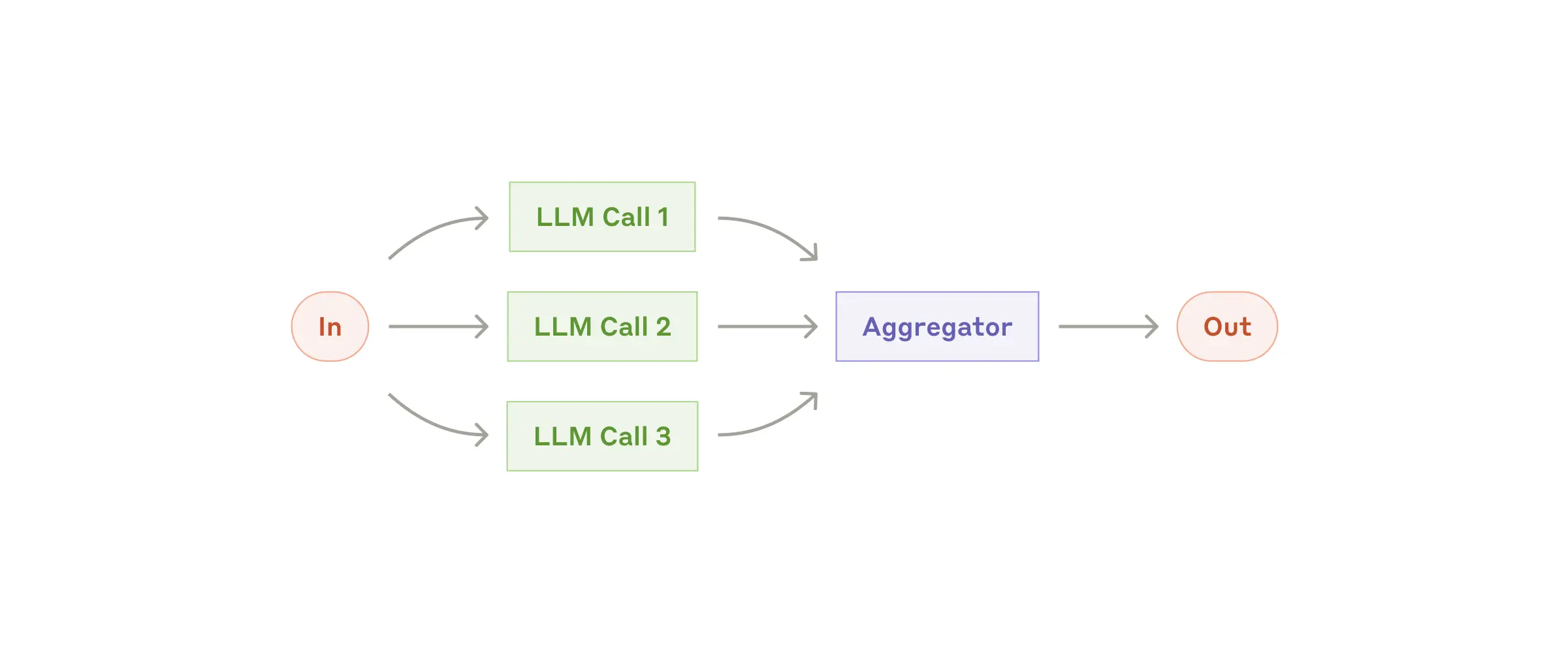

The Parallel Workflow pattern uses a fan-out/fan-in approach where multiple agents work on different aspects of a task simultaneously, then a coordinating agent aggregates their results.

Complete Implementation

The Parallel Workflow is ideal for tasks that need multiple specialized perspectives at the same time. Here's a complete example using a student assignment grader:

Basic Setup

Configuration Options

You can customize the parallel workflow behavior:

Key Features

- Fan-Out Processing: Distribute work across multiple specialized agents

- Fan-In Aggregation: Intelligent result compilation and synthesis

- Concurrent Execution: All fan-out agents work simultaneously

- Specialized Roles: Each agent focuses on specific expertise areas

- Structured Output: Coordinated final result from aggregator agent
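The features listed above can be sketched with plain `asyncio`. This is a minimal, library-agnostic illustration of the fan-out/fan-in shape, not the mcp-agent API: `run_agent`, `aggregate`, and `parallel_workflow` are hypothetical names, and the agents are stubs that return canned strings where a real agent would call an LLM.

```python
import asyncio


async def run_agent(role: str, task: str) -> str:
    """Hypothetical stand-in for one specialized agent."""
    await asyncio.sleep(0.01)  # simulate I/O-bound work such as an LLM call
    return f"[{role}] reviewed: {task}"


async def aggregate(results: list[str]) -> str:
    """Hypothetical fan-in step: combine every agent's output into one result."""
    return "\n".join(results)


async def parallel_workflow(task: str, roles: list[str]) -> str:
    # Fan-out: start all agents concurrently.
    # asyncio.gather returns results in the same order as `roles`.
    results = await asyncio.gather(*(run_agent(role, task) for role in roles))
    # Fan-in: a single coordinating step sees all results at once.
    return await aggregate(list(results))


if __name__ == "__main__":
    roles = ["proofreader", "fact_checker", "style_enforcer"]
    print(asyncio.run(parallel_workflow("essay draft", roles)))
```

Because the stub agents only wait on simulated I/O, total runtime stays close to that of the slowest agent rather than the sum of all of them, which is the main benefit of running the fan-out step concurrently.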

Use Cases

Content Review and Grading

Perfect for academic or content evaluation where multiple criteria need simultaneous assessment:

- Grammar and style checking

- Factual accuracy verification

- Compliance with guidelines

- Technical quality assessment
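As a rough illustration of grading against several criteria at once, the sketch below runs two toy checkers concurrently and merges their scores into one structured report. Everything here is an assumption made for the example: the `Finding` type, the 0 to 10 scale, and the heuristics in `check_grammar` and `check_length` are hypothetical placeholders for LLM-backed agents, and only two of the criteria listed above are covered.

```python
import asyncio
from dataclasses import dataclass


@dataclass
class Finding:
    criterion: str
    score: int  # hypothetical 0-10 scale
    note: str


async def check_grammar(text: str) -> Finding:
    # Toy heuristic standing in for a proofreading agent.
    ok = text.strip().endswith(".")
    note = "ends with a period" if ok else "missing final period"
    return Finding("grammar", 10 if ok else 6, note)


async def check_length(text: str, min_words: int = 5) -> Finding:
    # Toy heuristic standing in for a guideline-compliance agent.
    words = len(text.split())
    return Finding("guidelines", 10 if words >= min_words else 4, f"{words} words")


async def grade(text: str) -> dict:
    # Fan-out: evaluate every criterion concurrently.
    findings = await asyncio.gather(check_grammar(text), check_length(text))
    # Fan-in: compile per-criterion scores into one report.
    overall = sum(f.score for f in findings) / len(findings)
    return {
        "overall": overall,
        "by_criterion": {f.criterion: f.score for f in findings},
    }


if __name__ == "__main__":
    print(asyncio.run(grade("The cat sat on the mat.")))
```

Returning a typed `Finding` from each checker keeps the aggregation step simple: it only has to combine scores, and new criteria can be added by passing another coroutine to `asyncio.gather`.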

Multi-Domain Analysis

Analyze complex topics from different expert perspectives:

- Financial reports (risk, compliance, performance analysis)

- Legal documents (regulatory, contractual, liability review)

- Medical cases (diagnosis, treatment, side effects)

- Technical specifications (security, performance, usability)

Quality Assurance

Parallel validation across different testing criteria:

- Code review (security, performance, maintainability)

- Product testing (functionality, UI/UX, accessibility)

- Data validation (accuracy, completeness, consistency)

Research and Information Gathering

Simultaneous information collection from specialized sources:

- Market research (competitor analysis, trend analysis, customer feedback)

- Literature review (multiple databases, different methodologies)

- Due diligence (financial, legal, technical assessments)

Setup and Installation

Clone the repository and navigate to the parallel workflow example:

mcp_agent.secrets.yaml:

Full Implementation

See the complete parallel workflow example with the student assignment grading use case.