Overview

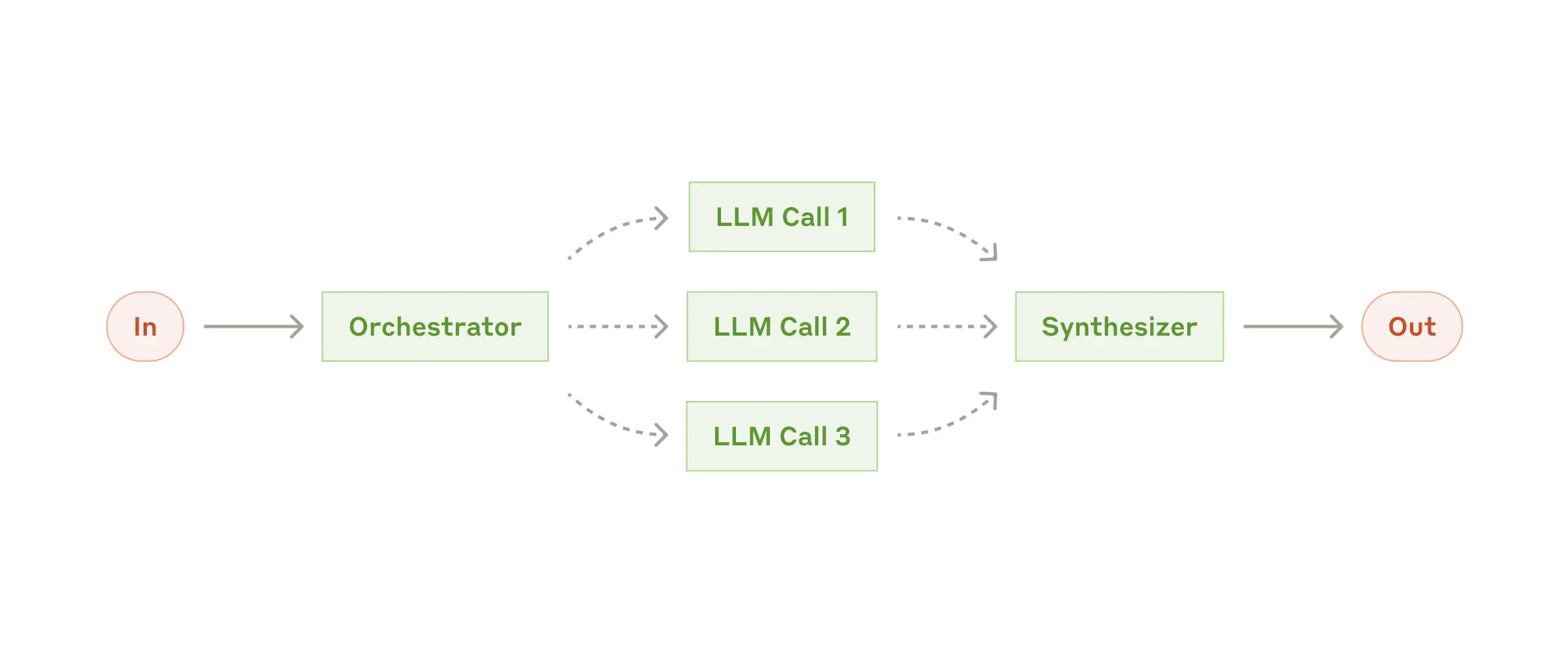

The Orchestrator pattern handles complex, multi-step tasks through dynamic planning, parallel execution, and intelligent result synthesis. It breaks down objectives into manageable steps and coordinates specialized agents.

Complete Implementation

The Orchestrator workflow handles complex multi-step tasks through dynamic planning and coordination. Here's a comprehensive implementation:

Basic Orchestrator Setup

Advanced Planning Strategies

The orchestrator supports two planning approaches:

Full Planning

Generate the complete execution plan upfront, before any step runs.

Iterative Planning

Plan one step at a time, adapting each new step to the results of the previous ones.

Configuration and Monitoring

Key Features

- Dynamic Planning: Breaks down complex objectives into manageable steps

- Parallel Execution: Tasks within each step run simultaneously

- Iterative vs Full Planning: Choose between adaptive or upfront planning

- Context Preservation: Previous results inform subsequent steps

- Intelligent Synthesis: Combines results from multiple specialized agents
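The features above can be illustrated with a minimal, library-free sketch. It is not the mcp-agent API: `Task`, `Step`, `run_task`, `run_step`, and `run_plan` are hypothetical names, and the "agents" are stubs that only echo their input. It shows steps running in order, tasks within a step running concurrently, and earlier results being passed on as context.

```python
import asyncio
from dataclasses import dataclass, field


@dataclass
class Task:
    description: str
    agent: str  # name of a hypothetical agent that would handle this task


@dataclass
class Step:
    description: str
    tasks: list[Task] = field(default_factory=list)


async def run_task(task: Task, context: list[str]) -> str:
    """Stand-in for a real agent call; reports how much prior context it saw."""
    await asyncio.sleep(0)  # yield control, as a real LLM or tool call would
    return f"[{task.agent}] {task.description} (saw {len(context)} prior results)"


async def run_step(step: Step, context: list[str]) -> list[str]:
    # Tasks inside one step are independent, so they run concurrently.
    return list(await asyncio.gather(*(run_task(t, context) for t in step.tasks)))


async def run_plan(steps: list[Step]) -> str:
    context: list[str] = []
    for step in steps:
        # Steps run in order; each one sees every earlier result.
        context.extend(await run_step(step, context))
    # Synthesis stub: a real orchestrator would ask an LLM to merge these.
    return "\n".join(context)


plan = [
    Step("Analyze", [Task("check grammar", "proofreader"),
                     Task("check facts", "fact_checker")]),
    Step("Report", [Task("write report", "writer")]),
]

if __name__ == "__main__":
    print(asyncio.run(run_plan(plan)))
```

In this sketch the two analysis tasks see no prior results because they run in the same step, while the report task sees both of their outputs.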

Planning Modes

Full Planning
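In full planning mode the whole plan exists before anything runs. The sketch below illustrates that control flow under stated assumptions: `make_full_plan`, `execute`, and `run_full` are hypothetical helpers, and the planner is hard-coded where a real one would call an LLM. It does not use the mcp-agent API.

```python
import asyncio


def make_full_plan(objective: str) -> list[list[str]]:
    """Hypothetical planner: returns every step (a list of tasks) at once.

    A real planner would be an LLM call; this one is hard-coded.
    """
    return [
        [f"research: {objective}"],
        [f"draft: {objective}", f"collect examples: {objective}"],
        [f"review: {objective}"],
    ]


async def execute(task: str) -> str:
    await asyncio.sleep(0)  # placeholder for agent work
    return f"done({task})"


async def run_full(objective: str) -> list[str]:
    plan = make_full_plan(objective)  # planning happens once, before execution
    results: list[str] = []
    for step in plan:
        # The plan is fixed: results are collected but never alter later steps.
        results += await asyncio.gather(*(execute(t) for t in step))
    return results


if __name__ == "__main__":
    for line in asyncio.run(run_full("API guide")):
        print(line)
```

Because the plan is fixed, this mode suits objectives whose steps are predictable in advance.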

Iterative Planning
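In iterative planning mode the planner is consulted again after every step, so each new step can depend on what has happened so far. The sketch below is one possible shape for that loop, not the mcp-agent API: `plan_next_step`, `execute`, and `run_iterative` are hypothetical, and the planner is a simple rule set standing in for an LLM call.

```python
import asyncio
from typing import Optional

# Maps a task verb to the word used in its (stubbed) result.
PAST_TENSE = {"reproduce": "reproduced", "fix": "fixed", "verify": "verified"}


def plan_next_step(objective: str, results: list[str]) -> Optional[list[str]]:
    """Hypothetical planner: chooses the next step from the results so far.

    Returns None once the objective is considered complete.
    """
    if not results:
        return [f"reproduce: {objective}"]
    if not any(r.startswith("fixed") for r in results):
        return [f"fix: {objective}"]
    if not any(r.startswith("verified") for r in results):
        return [f"verify: {objective}"]
    return None


async def execute(task: str) -> str:
    await asyncio.sleep(0)  # placeholder for agent work
    verb, subject = task.split(": ", 1)
    return f"{PAST_TENSE[verb]} {subject}"


async def run_iterative(objective: str, max_steps: int = 10) -> list[str]:
    results: list[str] = []
    for _ in range(max_steps):  # cap iterations so a bad planner cannot loop forever
        step = plan_next_step(objective, results)
        if step is None:
            break
        results += await asyncio.gather(*(execute(t) for t in step))
    return results


if __name__ == "__main__":
    print(asyncio.run(run_iterative("bug 42")))
```

The `max_steps` cap is a design choice of this sketch: an adaptive planner has no fixed plan length, so some upper bound is needed to guarantee termination.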

Use Cases

Complex Development Projects

Handle multi-step software development tasks with dependencies:

- Code Refactoring: Analyze codebase, identify issues, implement fixes across multiple files

- Feature Implementation: Requirements analysis, design, implementation, testing, documentation

- Bug Resolution: Reproduce issue, analyze root cause, implement fix, verify solution

- CI/CD Pipeline Setup: Configure build scripts, set up testing, deploy infrastructure

Research and Analysis

Coordinate comprehensive research workflows:

- Literature Reviews: Search databases, analyze papers, synthesize findings, write summaries

- Market Research: Gather competitor data, analyze trends, survey customers, compile reports

- Financial Analysis: Collect financial data, perform calculations, generate insights, create presentations

- Due Diligence: Legal review, technical assessment, financial audit, risk analysis

Content Production

Multi-stage content creation with quality assurance:

- Technical Documentation: Research topic, write content, review accuracy, format for publication

- Marketing Campaigns: Market analysis, content creation, design assets, campaign testing

- Academic Papers: Literature review, data analysis, writing, peer review, revision

- Product Launches: Requirements gathering, specification writing, testing, documentation

Operations and Automation

Complex operational workflows requiring coordination:

- Incident Response: Alert analysis, impact assessment, resolution planning, implementation

- Compliance Audits: Data collection, policy review, gap analysis, remediation planning

- System Migrations: Current state analysis, migration planning, execution, validation

- Performance Optimization: Monitoring analysis, bottleneck identification, optimization implementation

Setup and Installation

Clone the repository and navigate to the orchestrator workflow example:

mcp_agent.secrets.yaml:

mcp_agent.config.yaml:

The example workflow will:

- Load the story from short_story.md

- Run parallel analysis (grammar, style, factual consistency)

- Compile feedback into a comprehensive report

- Write the graded report to graded_report.md

Expected Output

The orchestrator generates a detailed execution plan and produces a graded report that includes:

- Grammar and spelling corrections

- Style adherence feedback based on APA guidelines

- Factual consistency analysis

- Overall assessment and recommendations

Full Implementation

See the complete orchestrator example with student assignment grading workflow.